Blog

How I joined the bug 323 community

This is the story of bug that makes you want to tear your hair out. The kind of bug where you end up thinking: “But that's not possible, the compiler is screwing up, no way it's something else!”

And a compiler bug is no small thing: in 12 years of C++ programming, I've found (and reported) just... one. And I can tell you that before reporting the bug to GCC, I tested/retested/checked in every possible way to make sure I wasn't going to look like an idiot.

Anyway. Here's the story.

The discovery

It's April 2023. With the game scheduled for release on May 15, 2023, I'm in a fairly intense period of work to try and meet the deadline. I'm mainly working on the “artistic” part (drawings, music), but sometimes I still add/correct little things in the code.

At the time, out of habit, I compile the Windows version with the 32-bit variant of MinGW: the inertia of older versions of Windows that didn't support 64-bit architecture had given me this habit, which, in reality, is no longer really necessary. Just as I was about to test the game on Windows, suddenly... a bug. A crash, pure and simple. Ah.

I compare the execution of the program with the GNU/Linux version and I quickly find where it crashes: when I compute the vaunted “floor graph” to represent the places in the room where the characters can walk, there's a discrepancy in the execution.

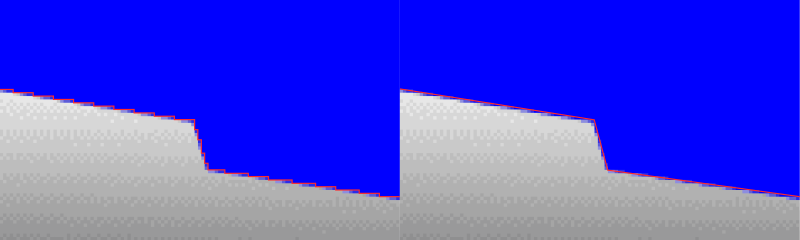

To put it simply, at this point I create the ground contour represented by a graph from the pixel values. To do this, I start by representing the edges of the ground with a rather complex polyline that follows the pixels, then run a polyline simplification algo.

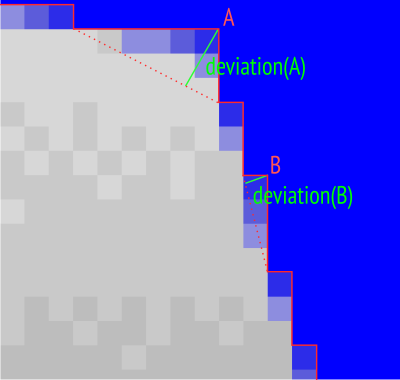

This algorithm uses a priority queue: each vertex is inserted into it, and sorted according to its “deviation”, i.e. the distance between that vertex and the segment joining its two neighboring vertices. The vertices with the smallest “deviation” are those which will induce the smallest “distortion” of the polyline if we delete them, so we'll start by deleting those.

When we can no longer remove a vertex without inducing distortion above a certain threshold, we stop: in practice, this allows us to quickly find a simple polyline that remains fairly close to the edges of the ground.

Well, I realize that, for some strange reason, the algorithm on Windows starts to diverge from the one on GNU/Linux after a few iterations (it deletes a different vertex and ends up deleting vertices that aren't supposed to be deleted, hence the crash). Which is frankly bizarre, as the algorithm is deterministic: give it the same input image, and it must return exactly the same polyline after deleting exactly the same vertices in exactly the same order.

At the time, I insulted Windows/MinGW a little because, after reading, re-reading and re-checking my code, I suspected a compiler bug. But then, after realizing a major difference – my MinGW was 32-bit when my GCC on Gnunux was 64-bit – I try to compile my code on GNU/Linux but in 32-bit... and boom. The bug appears. I try again with Clang. Same bug.

A bug that appears on several compilers can't be a compiler bug, I figure. Haha. I had no idea...

The heart of the bug

My priority queue is in fact represented by a std::set (a set of

unique elements that are kept sorted), which allows me to remove

elements that are not at the beginning of the queue. The subtlety is

that we're going to give an adapted comparison function to the set.

The comparison is first of all the deviation I was talking about: when

we insert a vertex into the set, we want the vertices with the

smallest deviation to be placed at the beginning of the set. So

let's do this:

bool operator() (const GVertex& a, const GVertex& b)

{

double da = deviation(a);

double db = deviation(b);

return da < db;

});

But beware, danger! Two vertices can have the same deviation, which,

in a std::set that ensures element unicity, means that only the

first vertex would be inserted in the priority queue. Obviously, we

want to be able to have two vertices with the same deviation in the

priority queue. In this case, of course, we don't care which vertex is

deleted first, so we'll simply take the first vertex of the graph (the

vertices are compared according to their indices), just to keep things

deterministic.

The function thus becomes:

bool operator() (const GVertex& a, const GVertex& b)

{

double da = deviation(a);

double db = deviation(b);

if (da == db)

return a < b;

return da < db;

});

I quickly realize that the problem lays with this function: at some

point, the if() returns true in 64-bit and false in 32-bit.

Aparté about equality tests on doubles

Here, I have to make an aparté. I had the bad idea to share this piece of code online. You wouldn't believe the number of comments I got, basically saying:

Ah, but of course you're doing an equality test on doubles! Never do that! That's where the problem lies!

Pchhh, never EVER test the equality of doubles: if you want to compare, you compare the difference with a small tolerance!

Two doubles are never equal! Of course, if you do an equality test on doubles, you're asking for trouble.

The list goes on.

Well.

I was a little annoyed to find myself replying to 15 messages from people who had simply learnt “double equality = danger” and who were throwing it at me without having bothered to try and figure out what the code was doing. And without thinking that maybe I know a little bit about it and that, NO, it didn't come from there.

For the record, before becoming a video game developer, I did a thesis in computational geometry, then worked for 6 years in a company that developed computational geometry software. In particular, I used libraries such as MPFR, which enable multi-precision computations to avoid double-related problems when computing on real numbers. Please believe me when I say that I know what I'm doing when I compare doubles.

Anyway. If you, too, saw the equality test on doubles and thought “lol, noob, of course equality tests on doubles don't work”, I'd like to set the record straight:

-

An equality test on doubles is valid, it's not taboo, it's not Voldemort, you have the right to write it, the world won't end if you do it, you are allowed to do it. Provided you know what you're doing, of course.

-

The equality test on doubles is problematic only if you think you're testing equality between real numbers (in the mathematical sense). Indeed, do the test: (2.01-1.01) is not equal to 1.00 with doubles, because finite precision means that a different representation of a single real number can give slightly different values. On the other hand (and I insist on this point): (2.01-1.01) is guaranteed to be equal to (2.01-1.01); 1.00 is guaranteed to be equal to 1.00; yes, EVEN with doubles. What I mean is that if you have two doubles that have the same value (the same value in the computer sense, with the same bits in the same bytes), that have been computed in the exact same way, then the equality test between these two doubles will return TRUE. And thank goodness for that!

-

In my case, it's even simpler: we're not interested in the case where the two doubles are equal, it's just a special case we've set up to be safe, because in the case where the doubles are different, it's even simpler. Let's imagine that you're right and that “two doubles are never equal” (which is completely false, of course): in that case, there's no problem, because the

ifwill never be true and the algorithm will always use deviation as a priority. In practice, as polylines come from regular pixels, obviously there will be many equal deviations... -

Using a tolerance? Sorry, but you must not have read the code, or understood it at all. If, instead of comparing

daanddb, I doif(std::fabs(da-db)<epsilon), what happens? Well, if two vertices have a close deviation, instead of sorting them by deviation, we'll sort them by their indices. GREAT. What's the point, apart from making the algorithm less optimal?

Anyway. It's very good to always be careful with double comparisons. But it's better if you understand exactly why and in which cases. It would also be nice to make sure you've understood the code before commenting on tips that you literally learn in your first year of a programming course.

Aparté finished.

Giving up

I literally spend a day working on this bug. I'm in a rush to finish the game. I'm stressed, and no matter how I look at the problem, I just can't figure it out. Let alone fix it. Well, I can. I can fix it in several ways that make no sense at all:

- By disabling compiler optimizations

- By adding a

std::cerrto comparisons (Schrödinger's bug: it disappears when you observe it) - By prestoring deviation values instead of calculating them on the fly (no, no, they do not vary, I checked)

- A whole bunch of other weird stuff (declaring another variable in the middle of the comparison, for example).

In short. On 64-bit? No problem. On 32-bit unoptimized? No problem. On 32-bit optimized? Boom. Error.

I come to a point where, in the debugger, I literally see the program do this:

- Evaluate the equality between doubles as false (which is an error)

- Do not enter

if() - Then evaluate the inequality between doubles as false (which is exact but thus contradicts point 1.)

It REEKS of a compiler bug. Except that, as I said, I've got the error on both GCC and Clang. Two different compilers with the same bug? I don't believe it.

I lost a day and didn't make any progress. What's more, for the record, this bug only appears in the development version of the game (and in the 32-bit version that I won't be distributing after all): in the version distributed to the public, this vaunted ground graph is pre-computed. So, in the big picture, this bug won't affect anyone, but it annoys me enormously not to understand.

Still, I end up throwing in the towel: I've wasted too much time on this, I've got to move on to finish my game. I make a mental note to myself to come back to it later, after the release.

Coming back to it

I met the deadline, and the game was released on May 15 (check it out, it's cool).

Fast-forward to yesterday: it's mid-August, it's quiet, I'm having a bit of a slump and I'm thinking I'll dive back into this bug, with a clear head.

The big problem with the bug is that it's in the middle of a very large program (the game engine), with lots of complicated files and dependencies. The reflex, in this case, is to isolate the buggy part as much as possible, to make sure that the bug doesn't come from something much more twisted (for example, a memory leak somewhere in the game that would write nonsense in the priority queue).

I'm working on it:

- I take the graph computation out of the game engine: we load the image and do only the graph computation, removing useless dependencies (LZ4, YAML)

- I reduce the image as much as possible to a small graph

- I end up hardcoding the graph in question (I manage to reproduce the bug with 16 vertices instead of 3900), no longer needing to load the image, I remove the dependency to SDL

- I get rid of my geometry mini-library, keeping only two or three useful functions.

- I end up reducing the priority queue as much as possible, with an error as soon as the second vertex is inserted.

Finally, I end up with a 50-line program with no dependencies other than the STL, which triggers the bug. Here it is:

#include <array>

#include <cmath>

#include <iostream>

#include <set>

using double2 = std::array<double, 2>;

struct Comparator

{

static double deviation (double2 p)

{

const double2 p0 { 1, 0 };

const double2 vp0p1 { -1 / std::sqrt(2), 1 / std::sqrt(2) };

const double2 vp0p { p[0] - 1, p[1]};

const double dotprod = vp0p1[0] * vp0p[0] + vp0p1[1] * vp0p[1];

const double2 proj { 1 + dotprod * vp0p1[0], p0[1] + dotprod * vp0p1[1] };

return std::sqrt ((proj[0] - p[0]) * (proj[0] - p[0]) +

(proj[1] - p[1]) * (proj[1] - p[1]));

}

bool operator() (const double2& a, const double2& b) const

{

const double da = deviation(a);

const double db = deviation(b);

if (da == db)

return a < b;

return da < db;

}

};

void insert (std::set<double2, Comparator>& set, double2 point)

{

const double deviation = Comparator::deviation(point);

std::cerr << "Inserting " << std::defaultfloat << point[0] << " " << point[1]

<< " with deviation = " << deviation << " / hex="

<< std::hexfloat << deviation << std::endl;

if (set.insert (point).second)

std::cerr << " -> Success" << std::endl;

else

std::cerr << " -> Failure" << std::endl;

}

int main (int, char**)

{

std::cerr.precision(18);

std::set<double2, Comparator> set;

insert(set, { 0, 0 });

insert(set, { 1, 1 });

return EXIT_SUCCESS;

}

Basically, this program inserts two points into a std::set with the

comparison function: these two points are different ((0,0) and

(1,1)) but have the same deviation value (equal to the root of two

divided by two).

Compile this program in 64-bit mode and the output will be:

Inserting 0 0 with deviation = 0.707106781186547573 / hex=0x1.6a09e667f3bcdp-1

-> Success

Inserting 1 1 with deviation = 0.707106781186547573 / hex=0x1.6a09e667f3bcdp-1

-> Success

This is indeed the expected result.

Compile it in 32 bits with at least -O1 enabled (-O2 if you're

using Clang) and you'll get:

Inserting 0 0 with deviation = 0.707106781186547573 / hex=0x1.6a09e667f3bcdp-1

-> Success

Inserting 1 1 with deviation = 0.707106781186547573 / hex=0x1.6a09e667f3bcdp-1

-> Failure

The second point is not inserted, there's a bug.

It's crystal clear.

At that point, I'm a lot more confident about the “my code is correct, it's a compiler bug” side of things. And I've clearly identified what is being screwed up.

I then search for “c++ O1 optimization double comparison bug branch”. And the answer appears.

The infamous bug 323 of GCC

GCC bug number 323, entitled “optimized code gives strange floating point results”, dates back to June 14, 2000. At the time of writing, it has 229 comments, 101 of which refer to a “duplicate” (another bug that someone else has posted, but which is in fact the same as this one). That sets up the mood.

If this bug has been around for so long, it's because, contrary to appearances, it's not really a compiler bug: it's a processor bug. Yes, that's right.

More precisely, it's a behavior of the FPU (Floating-Point Unit) that causes unexpected behavior when the code is optimized.

The idea is that computations on floating-point numbers can be done

with different accuracies: in RAM, float numbers have a precision of

32 bits, the famous double have a precision of 64 bits (double

precision, indeed)... and computations in the FPU use the registers of

the processor with a precision of 80 bits. This extra precision during

a computation minimizes the rounding error.

So what happens in my code? Well, when the code is not optimized, the

deviation value is computed by the FPU on 80 bits (in the processor

registers) for each of the two vertices, then stored in a 64-bit

double variable in RAM (and therefore rounded off, as precision is

reduced) for each of the two vertices. All's well.

But when the code is optimized, the compiler realizes that when it comes to computing the second deviation, there's no point in putting it in RAM (which is costly in terms of runtime), since it will be used again immediately: it might as well be kept in a processor register and reused directly. Except... it won't have been converted to 64 bits and will thus have a higher precision than the deviation of the first vertex. And thus a different value. And boom, two values that should be equal become different, because one has been rounded to 64 bits and the other has not.

This explains why the bug disappears if we do seemingly unrelated

things in the code (declare another variable, add a std::cerr,

etc.): by making the processor do something else in the meantime, we

simply force the second deviation to be stored in RAM, which solves

the problem.

Obviously, this behavior has been corrected in subsequent generations of FPUs to comply with the IEEE-754 standard, which stipulates, among other things, that a floating-point computation must be deterministic. But since it's a problem at the heart of the processor, the bug can be found in both GCC and Clang (as well as in others, probably). And this famous bug 323 continues to be regularly commented on and duplicated, as it is not strictly speaking a GCC bug.

I still pretty much agree with this comment :

Calling a trivial function twice with an identical parameter may return a value less than, equal to or greater than itself (depending on register allocation in the context of the calls). This is a really nasty piece of nondeterministic behaviour. Weren't compilers and "high level" languages invented to get around exactly this kind of hardware dependency?

And finally, a little comment that made me laugh because I had the feeling they were talking directly to me:

I'd like to welcome the newest members of the bug 323 community, where all x87 floating point errors in gcc come to die! All floating point errors that use the x87 are welcome, despite the fact that many of them are easily fixable, and many are not! We're all one happy family, making the egregious mistake of wanting accuracy out of the most accurate general purpose FPU on the market!

How do we fix this?

Now that we've figured out why this behavior can happen, we have a practical question: how do we prevent our code from triggering this behavior?

Well, you've got the hard way out: you can use the -ffloat-store

option at compile time. This option literally forces the compiler to

store all floats in RAM (thus making the above error impossible). So,

sure, it solves the bug, but it's also a bit of a shame as it

neutralizes a whole bunch of potentially interesting optimizations

(not storing floats in RAM when it's not needed is very advantageous

and safe in 99% of cases).

A much more refined way (because you can integrate it only in potentially problematic places in your code, typically on double equality tests) is to use the "volatile" keyword. To quote Wikipedia, “this keyword prevents an optimizing compiler from optimizing away subsequent reads or writes”. Quite simply: optimizations are deactivated locally, not globally.

All we have to do is modify our comparison function as follows:

bool operator() (const double2& a, const double2& b) const

{

const volatile double da = deviation(a);

const volatile double db = deviation(b);

if (da == db)

return a < b;

return da < db;

}

And the bug disappears. You can try it out: the code now works even in 32-bit mode with the optimizations.

VICTORY!

Addendum (September 3, 2023)

Well, this article got a bit out of hand, and I saw quite a few people react by saying: “ah, but in the end, it was the right thing to do to be wary of equality tests on doubles, you see, we were right!”

So, if that's your conclusion, I invite you to read the article again. The solution to my problem isn't to avoid equality tests on doubles, it's to disable optimizations, that's very different.

If you tell me “don't cross the road, you might get hit by a unicorn”, and I cross the road and get hit by a car, you weren't right. So yes, if I'd listened to you, I wouldn't have had a problem, but that would have implied a lot of other problematic stuff. In this case, we need to do equality tests on doubles, otherwise there's a bunch of scientific libraries that simply can't exist any more.

I insist, but we're talking about two behaviors of floating-point numbers that have nothing to do with each other:

-

the fact that (2.01-1.01) is not equal to 1.00 in doubles is a correct and expected result; it complies to the C++ standard; if you learn to use floating-point numbers properly, it's clear and logical; it will happen with any implementation of floating-point numbers, on any architecture;

-

the fact that a single test gives true at one point in the code and then false is a bug; it's not an expected result; it's not logical; it only happens with a certain architecture and with a certain level of optimization; even if you've read the C++ standard, it's not something you can expect.

And if you start coding avoiding certain things on the assumption that there might be a problem with the processor architecture, or that something as simple as an equality test might become non-deterministic, you can just stop programming right away.

By the way, this bug isn't limited to equality tests, so fixing it by replacing the equality test with a comparison test with an epsilon doesn't prevent you from it at all: your comparison can just as easily return false then true.

Anyway, I'll stop here, but let's be square on the problem and the solution: here, it's an optimization problem, so we disable optimizations (via the volatile keyword). Simple, right?

Conclusion

I thought compiler bugs were the worst thing that could happen in programming (because the last thing you'd suspect): I was wrong, processor bugs are worse.

Despite all the hours wasted on this bug, I'm glad of one thing: I was

persistent enough to finally figure out what was going on. And the

icing on the cake is a simple and optimal fix (the use of volatile).

If I had to conclude with a word of advice: when you encounter a bug, never give up until you've really got to the bottom of the problem (well, provided you have the time to do it, which is often the limiting parameter). Often, you'll understand a real problem in your code; sometimes, you'll unexpectedly learn a lot about processor optimization, and it doesn't happen every day.